Machine Learning in HEOR: Are We at the Final Frontier?

Chintal H. Shah, MS, BPharm, University of Maryland, Baltimore, MD, USA

Machine learning methods have been around for decades, but their application was limited by the computational challenges they pose. The emergence of affordable and powerful computers and tools, combined with the rise in “big data,” has led to rapid developments in the application of machine learning. These developments have extended to healthcare research as well, and today’s session highlighted some of the latest research on machine learning in HEOR. This makes us wonder: are we close to the final frontier in the widespread application of machine learning in HEOR?

Woojung Lee (University of Washington, USA) presented research titled “A Scoping Review of the Use of Machine Learning in Health Economics and Outcomes Research: Part 1 - Data from Wearable Devices,” in which she and her team explored emerging patterns in the use of machine learning on data collected from wearables in the HEOR domain. To identify these patterns, they carried out a scoping review of studies published in PubMed between January 2016 and March 2021. In the 148 studies identified, the vast majority (~80%) of machine learning usage was to monitor events in real time (versus predicting future outcomes), and the outcomes evaluated varied from general physical or mental health (45%) to more disease-specific outcomes, such as disease incidence (18%) and disease progression (15%). Further, the authors found that most of the devices used were wearable health devices (as opposed to smart watches and smart phones), while the most common techniques used were tree-based methods. The biggest challenges were found to be data quality, selection bias, security, and privacy. Assessment of the quality of included studies and the inclusion of computer scientists and computer science-specific databases were outside the scope of this research, but future studies may explore these domains further. Lee concluded that, based on their findings, there is potential for wearable devices to provide rich data for machine learning studies in HEOR.

Dongzhe Hong (Tulane University, USA) presented a retrospective study he carried out with coauthors, in which they compared the performance of a machine learning-driven, EHR-based prediction model tailored to the population of interest against existing heart failure risk equations, namely the Risk Equations for Complications Of type 2 Diabetes (RECODe) risk equation and the Building, Relating, Assessing, and Validating Outcomes (BRAVO) risk equation, in patients with type 2 diabetes (T2D). The tailored model (henceforth referred to as the “Ochsner Heart Failure risk model”) was based on Cox proportional hazards models followed by LASSO regression for variable selection (Figure 1). LASSO regularization was selected because it is a well-performing and validated method. The Ochsner Heart Failure risk model, BRAVO, and RECODe shared 3 common factors (Figure 1), and the Ochsner model was found to have better internal discrimination than the other 2 models. This highlighted the role of tailored equations in risk prediction models. Some factors, such as education level and smoking status, were not available, and a further limitation of the data was that more than 50% of the information on HbA1c was missing. This research concluded that EHR data and machine learning methods can be used to develop locally fitted models, and that researchers should be cautious about the results of “generalized” models.

Figure 1. Model development and assessment of model performance.
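As background to the Cox-plus-LASSO approach described for the Ochsner model above, a LASSO-penalized Cox model is commonly written as the objective below. This is the generic textbook form (Breslow-style partial likelihood, ignoring tied event times), not the presenters' exact specification. All symbols are notation assumed here for illustration: $x_i$ is the covariate vector for patient $i$, $\delta_i$ is the event indicator, $R(t_i)$ is the set of patients still at risk at time $t_i$, and $\lambda \ge 0$ is the penalty strength.

```latex
\hat{\beta} = \arg\max_{\beta}
\left[
  \sum_{i:\,\delta_i = 1}
  \left(
    x_i^{\top}\beta - \log \sum_{j \in R(t_i)} \exp\!\left(x_j^{\top}\beta\right)
  \right)
  - \lambda \sum_{k=1}^{p} \lvert \beta_k \rvert
\right]
```

Larger values of $\lambda$ shrink more coefficients exactly to zero, which is why the penalty can double as a variable selection step.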

Jieni Li (University of Houston, USA) presented her team’s research titled “Machine Learning Approaches to Evaluate Treatment Switching in Patients With Multiple Sclerosis: Analyses of Electronic Medical Records.” TriNetX data were used for the analysis, and the Andersen behavioral model was used to inform variable selection. Logistic regression (LR), Least Absolute Shrinkage and Selection Operator regression (LASSO), random forests (RF), and extreme gradient boosting (XGBoost) were used to develop prediction models with 72 baseline variables. Models were trained on a random 70% split of the data with up-sampling, and hyperparameters were determined using 10-fold cross-validation. No missing value imputation was carried out; instead, missing values were treated as a separate category. The RF model achieved the best performance, while the other models were comparable to one another. The authors concluded that more work is needed to understand the role of ML approaches in optimal treatment selection to provide individualized care.
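The data preparation steps described above (missing values kept as their own category, a random 70% training split, and up-sampling) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' pipeline: the function names, the `switched` label, the `age_group` variable, and the toy data are all hypothetical, and model fitting and 10-fold cross-validation are omitted. The sketch assumes up-sampling is applied to the training split only, so that the held-out data keep their original class balance.

```python
import random
from collections import Counter

def encode_missing(rows, missing_label="Missing"):
    """Replace None with an explicit category instead of imputing a value."""
    return [{k: (missing_label if v is None else v) for k, v in row.items()}
            for row in rows]

def split_train_test(rows, train_fraction=0.7, seed=0):
    """Shuffle a copy of the rows and cut it into train and test parts."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]

def upsample_minority(train_rows, label_key="switched", seed=0):
    """Resample smaller classes with replacement until all classes match the largest."""
    rng = random.Random(seed)
    by_class = {}
    for row in train_rows:
        by_class.setdefault(row[label_key], []).append(row)
    target = max(len(members) for members in by_class.values())
    balanced = []
    for members in by_class.values():
        balanced.extend(members)
        balanced.extend(rng.choices(members, k=target - len(members)))
    rng.shuffle(balanced)
    return balanced

# Hypothetical toy data: 100 patients, 20 of whom switched treatment,
# with every fifth patient missing the (made-up) age_group variable.
rows = [{"age_group": None if i % 5 == 0 else "40-59",
         "switched": 1 if i < 20 else 0} for i in range(100)]
rows = encode_missing(rows)
train, test = split_train_test(rows)
balanced_train = upsample_minority(train)
print(Counter(r["switched"] for r in train))
print(Counter(r["switched"] for r in balanced_train))
```

Up-sampling before the split would let copies of the same patient land in both the training and test data, which tends to inflate apparent performance; that is the reason for the ordering used in this sketch.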

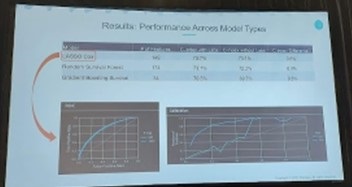

Zeynep Icten, PhD (Data Science Solutions at Panalgo, USA) presented work she and her colleagues carried out (“Predictors of Major Adverse Cardiovascular Events Among Type 2 Diabetes Mellitus Patients: A Machine Learning Time-to-Event Analysis”) in which they identified predictors of major adverse cardiovascular events (MACE) in patients with type 2 diabetes mellitus (T2DM). The Optum® Integrated Electronic Health Records and Claims database was used, and regularized Cox proportional hazards regression (RegCox), random survival forest, and gradient boosting survival machine learning-based models were examined across a wide variety of features (240 in total) (Figure 2). One of the novel aspects of this study was that it used machine learning for a relatively less common purpose: “time-to-event” analyses. RegCox was the best-performing model (based on the C-index, see Figure 2), and based on this model, features associated with increasing MACE risk included older age, higher Charlson score, systolic blood pressure, total cholesterol, number of comorbidities, and lower HDL, along with use of furosemide and insulin glargine. Commercial insurance, being from the West, being female, and having never smoked were associated with decreasing risk. It was pointed out that the XGBoost model used the fewest features at only a minimal cost in predictive performance and may be the most applicable method in a real-world setting. This study was a great example of using machine learning in a “time-to-event” analysis.

Figure 2. Performance across model types.
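The C-index used to compare the models above measures how often a model ranks patients correctly by risk. The sketch below is a simplified pure-Python version of Harrell's concordance index, included only to illustrate the idea; it is not the presenters' implementation, it ignores pairs with tied follow-up times, and the function name and toy inputs are assumptions made here.

```python
def concordance_index(times, events, risk_scores):
    """Harrell's C: share of comparable pairs where the higher-risk subject fails first.

    times       -- follow-up time for each subject
    events      -- 1 if the event was observed, 0 if censored
    risk_scores -- model output, where a higher score means higher predicted risk
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if not events[i]:
            continue  # a pair is only usable if the earlier subject had an observed event
        for j in range(n):
            if times[i] < times[j]:  # subject i failed while j was still under follow-up
                comparable += 1
                if risk_scores[i] > risk_scores[j]:
                    concordant += 1.0
                elif risk_scores[i] == risk_scores[j]:
                    concordant += 0.5  # tied predictions count as half
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable

# Hypothetical toy data: four patients, the third one censored.
times = [2, 4, 6, 8]
events = [1, 1, 0, 1]
print(concordance_index(times, events, [0.9, 0.7, 0.5, 0.1]))
```

A value of 0.5 corresponds to ranking no better than chance and 1.0 to perfect ranking, which is the scale on which model comparisons of this kind are read.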

These sessions, presented in the black box that served as the HEOR Theater in the exhibit hall, demonstrated that machine learning need not be a black-box technique and can be used to identify important predictors of various health outcomes!