Leveraging ePRO Paradata for Patient-Centered Trial Designs

Andrzej Nowojewski, PhD, AstraZeneca, Cambridge, England, UK; Erik Bark, BSc, AstraZeneca, Gothenburg, Sweden; Vivian H. Shih, DrPH, and Sean O’Quinn, MPH, AstraZeneca, Gaithersburg, MD, USA; Richard Dearden, PhD, AstraZeneca, Cambridge, England, UK

Introduction

Patient-reported outcome (PRO) instruments play a vital role in drug development by gathering data directly from patients and capturing their perspectives on symptoms, functioning, and overall health-related quality of life.1 Typically, these data are collected through validated self-reported questionnaires. Electronic PROs (ePROs), administered on devices such as tablets or smartphones, have now become the standard.2 This shift is driven by growing evidence that ePROs offer benefits such as improved adherence,3 reliability, and fewer secondary data entry errors4 compared with traditional pen-and-paper methods.

However, ePRO devices offer more than just the ability to collect patient responses. They also gather paradata: data about the data collection process itself, such as timestamps indicating when patients started and finished a questionnaire, login attempts, and device power levels. Although such datasets have received limited attention in the health economics and outcomes research literature, recent work has highlighted their potential value.5 Analyzing paradata can offer insights into how patients interact with ePRO devices and PROs, which can inform more patient-centric clinical study designs and provide a better measure of the effort patients must dedicate to completing PROs. Because these analyses require no additional work from patients themselves, they can contribute to our understanding of respondent burden in clinical trials.6

"Patients who demonstrated higher adherence prior to randomization maintained higher adherence levels to the end of the trial."

Methods

We analyzed data from the OSTRO study (NCT03401229),7 a phase III, interventional, randomized, placebo-controlled, double-blind, multicenter, longitudinal respiratory clinical trial conducted between 2017 and 2020 that investigated benralizumab in patients with nasal polyposis.

The study included a PRO measure, the Nasal Polyposis Symptom Diary (NPSD),8 which patients completed every morning for up to 80 weeks (including the 56-week treatment period) on an electronic device that captured the start and end time of each completed assessment. The 360 patients included in this analysis completed more than 162,000 daily NPSDs, representing almost 100 person-days of collective effort.
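As a rough consistency check, the two totals above imply an average completion time of a little under a minute per diary. The sketch below simply divides total effort by the number of assessments; the inputs are the rounded figures quoted in the text, so the result is approximate.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def mean_seconds_per_assessment(person_days: float, n_assessments: int) -> float:
    """Average completion time implied by total effort and number of assessments."""
    return person_days * SECONDS_PER_DAY / n_assessments


# Rounded totals from the text: "almost 100" person-days over "more than 162,000" NPSDs.
avg = mean_seconds_per_assessment(100, 162_000)
print(f"{avg:.1f} s per NPSD")  # 53.3 s per NPSD
```

Because the true effort is slightly below 100 person-days and the true count slightly above 162,000, the actual mean is somewhat below 53.3 seconds.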

To identify factors driving patient adherence and response time (ie, the time it took a patient to complete the daily NPSD), we used generalized linear mixed-effects models to account for the correlation among data coming from the same patient, site, and country.
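Both outcomes can be derived directly from the paradata. The sketch below assumes a hypothetical record layout (`DiaryRecord`, with a patient identifier and start and end timestamps) and defines response time as end minus start, and adherence as the fraction of calendar days in a period on which a diary was started. It only prepares the outcome variables; the mixed-effects models themselves are not shown.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass
class DiaryRecord:
    """One completed daily diary (hypothetical paradata layout)."""
    patient_id: str
    start: datetime
    end: datetime


def response_time_seconds(rec: DiaryRecord) -> float:
    """Response time: end timestamp minus start timestamp, in seconds."""
    return (rec.end - rec.start).total_seconds()


def adherence(records: list[DiaryRecord], patient_id: str,
              first_day: date, last_day: date) -> float:
    """Share of calendar days in [first_day, last_day] on which a diary was started."""
    expected_days = (last_day - first_day).days + 1
    completed_days = {
        r.start.date()
        for r in records
        if r.patient_id == patient_id and first_day <= r.start.date() <= last_day
    }
    return len(completed_days) / expected_days


records = [
    DiaryRecord("P01", datetime(2018, 3, 1, 9, 0, 5), datetime(2018, 3, 1, 9, 1, 2)),
    DiaryRecord("P01", datetime(2018, 3, 2, 9, 25, 0), datetime(2018, 3, 2, 9, 25, 48)),
]
print(response_time_seconds(records[0]))                              # 57.0
print(adherence(records, "P01", date(2018, 3, 1), date(2018, 3, 4)))  # 0.5
```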

Findings

Prerandomization adherence is a strong indication of subsequent adherence

In the OSTRO study, the NPSD supported coprimary endpoints and multiple secondary endpoints, all of which were analyzed as changes from baseline. Before enrolling in the study, patients had to meet a minimum adherence requirement for the NPSD during the 14 days leading up to the randomization visit in order to generate sufficient baseline data. Patients were aware of this expectation and agreed to it when signing the informed consent form. Apart from adherence itself, this criterion did not introduce any statistically significant bias in the baseline characteristics of the population.
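A run-in adherence check of this kind takes only a few lines. The sketch below counts completed diaries in the 14 days before the randomization visit. `min_days` is a hypothetical parameter because the exact OSTRO threshold is not stated here, and excluding the visit day itself from the window is also an assumption.

```python
from datetime import date, timedelta


def run_in_adherence(completed_days: set[date], randomization: date, window: int = 14) -> int:
    """Number of the `window` days before randomization with a completed diary.

    Assumption: the window covers days -1 .. -window; the visit day itself is excluded.
    """
    return sum((randomization - timedelta(days=k)) in completed_days
               for k in range(1, window + 1))


def meets_run_in_requirement(completed_days: set[date], randomization: date,
                             min_days: int) -> bool:
    # min_days is hypothetical; the protocol's actual threshold is not given in this article.
    return run_in_adherence(completed_days, randomization) >= min_days


rand = date(2018, 5, 15)
# Diary missed only on day -3 before randomization:
completed = {rand - timedelta(days=k) for k in range(1, 15) if k != 3}
print(run_in_adherence(completed, rand))  # 13
```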

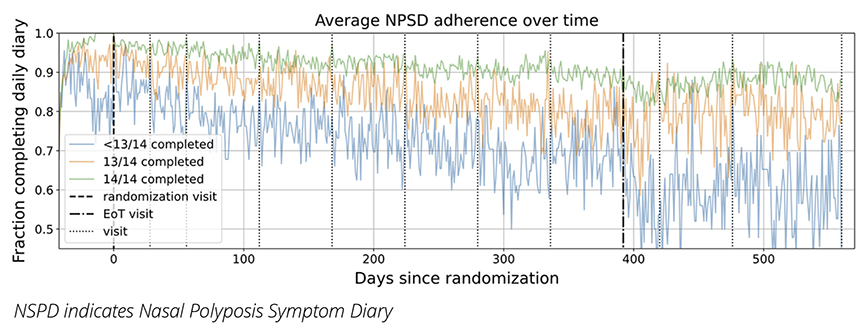

We aimed to determine whether the level of adherence to the NPSD before randomization could predict patient adherence throughout the trial. Patients who completed the NPSD every day in the 2 weeks preceding randomization were considerably more likely to maintain higher adherence throughout the trial. This finding was supported by a multivariable model that adjusted for patient characteristics (such as age, ethnicity, and sex), research site, and country, which yielded an odds ratio of 2.43 (99.9% CI: 2.31-2.62). To illustrate this finding, Figure 1 shows average adherence throughout the trial for patient groups categorized by their prerandomization adherence: (1) those who completed the NPSD on all 14 days, (2) those who completed it on 13 days, and (3) those who completed it on fewer than 13 days. Although adherence decreased over time in all groups, patients who demonstrated higher adherence before randomization maintained, on average, higher adherence to the end of the trial.

This finding supports using prerandomization adherence as an eligibility criterion for trial enrollment to ensure high PRO completion rates, which is especially important when the PRO supports key endpoints in the study.
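For readers less familiar with odds ratios, the sketch below computes an unadjusted odds ratio and a 99.9% Wald confidence interval from a 2×2 table, for example diary-days completed versus missed among patients with and without full prerandomization adherence. This is not the multivariable mixed-effects model used in the analysis: it ignores covariates and treats diary-days as independent, and the counts in the usage line are hypothetical.

```python
import math
from statistics import NormalDist


def odds_ratio_wald(a: int, b: int, c: int, d: int, level: float = 0.999):
    """Unadjusted odds ratio with a Wald confidence interval on the log scale.

    2x2 layout (all cells must be non-zero):
        a = exposed, outcome present     b = exposed, outcome absent
        c = unexposed, outcome present   d = unexposed, outcome absent
    """
    or_ = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 3.29 for a 99.9% interval
    return or_, math.exp(math.log(or_) - z * se_log), math.exp(math.log(or_) + z * se_log)


# Hypothetical counts: diary-days completed/missed for patients with full (14/14)
# versus incomplete prerandomization adherence.
or_, lo, hi = odds_ratio_wald(a=900, b=100, c=800, d=200)
print(f"OR = {or_:.2f} (99.9% CI: {lo:.2f}-{hi:.2f})")
```

A mixed-effects model is preferable for the real data because repeated diaries from the same patient, site, and country are correlated, which a simple 2×2 table cannot account for.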

Figure 1: Average NPSD adherence over time for all 325 patients who completed the OSTRO study, divided into 3 equally sized groups by prerandomization adherence (ie, how many times the patient completed the NPSD in the 2 weeks before the randomization visit). All patients are time indexed to the randomization visit. The dotted lines show visits planned according to the protocol; actual post-randomization visit dates may vary by up to ±7 days for each patient.
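The construction of a figure like Figure 1 can be sketched as follows: assign each patient to one of the three run-in groups, express diary dates relative to that patient's randomization visit, and average weekly adherence within each group. The input layout (tuples of run-in count, randomization date, and set of completed diary dates) and the weekly binning are assumptions for illustration, not necessarily how the figure was produced.

```python
from collections import defaultdict
from datetime import date, timedelta


def run_in_group(n_completed: int) -> str:
    """Groups by number of diaries completed in the 14 days before randomization."""
    if n_completed >= 14:
        return "14/14"
    if n_completed == 13:
        return "13/14"
    return "<13/14"


def weekly_adherence(completed: set[date], randomization: date, n_weeks: int) -> list[float]:
    """Share of days with a diary in each week; week 0 starts on the randomization day."""
    weeks = []
    for w in range(n_weeks):
        week_days = [randomization + timedelta(days=7 * w + k) for k in range(7)]
        weeks.append(sum(d in completed for d in week_days) / 7)
    return weeks


def group_curves(patients, n_weeks: int) -> dict[str, list[float]]:
    """Mean weekly adherence per run-in group.

    patients: iterable of (run_in_count, randomization_date, completed_dates); hypothetical layout.
    """
    sums = defaultdict(lambda: [0.0] * n_weeks)
    counts = defaultdict(int)
    for run_in, rand, completed in patients:
        group = run_in_group(run_in)
        for w, value in enumerate(weekly_adherence(completed, rand, n_weeks)):
            sums[group][w] += value
        counts[group] += 1
    return {g: [s / counts[g] for s in sums[g]] for g in sums}
```

Restricting the input to patients who completed the study, as in Figure 1, keeps dropout from being counted as nonadherence in later weeks.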

Patient completion time decreases with experience and increases with age

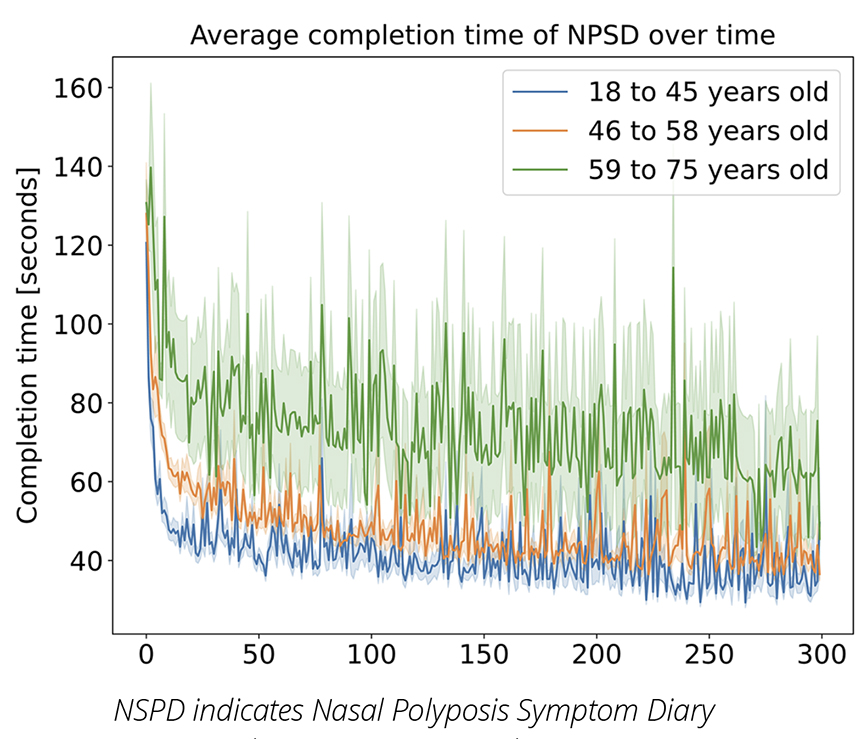

The paradata collected from ePRO devices offer valuable insights into the effort patients invest in completing PROs. By analyzing the start and end timestamps of the NPSD, we found that both patient age and previous experience with the tool had a notable impact on completion time. Figure 2 shows that response time decreased rapidly for all age groups (equally sized tertiles by age) across the first 30 days of NPSD completion, and the decrease continued at a more moderate rate for the remainder of the trial. Older patients took significantly longer to complete the PRO: patients in the oldest group took twice as long as patients in the youngest group.

Figure 2: Average NPSD completion time (with the standard error of the mean shown as a shaded area) for OSTRO patients divided into 3 equally sized groups by age.
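The age grouping and the shaded band in Figure 2 can be reproduced in outline with the standard library: split ages at the tertile cut points, then compute the mean and the standard error of the mean (sample standard deviation divided by the square root of n) of completion times for each group and study day. The record layout `(age, study_day, seconds)` is hypothetical, and ties at a cut point can make the groups slightly unequal.

```python
import math
from collections import defaultdict
from statistics import mean, quantiles, stdev


def age_tertile(age: float, cuts: list[float]) -> int:
    """0 = youngest third, 1 = middle, 2 = oldest, given the two tertile cut points."""
    low_cut, high_cut = cuts
    if age <= low_cut:
        return 0
    return 1 if age <= high_cut else 2


def mean_and_sem(values: list[float]) -> tuple[float, float]:
    """Mean and standard error of the mean (sample SD / sqrt(n)); SEM is NaN for n < 2."""
    m = mean(values)
    sem = stdev(values) / math.sqrt(len(values)) if len(values) > 1 else math.nan
    return m, sem


def daily_curves(records, cuts):
    """records: iterable of (age, study_day, completion_seconds), one per diary (hypothetical).

    Returns {(tertile, study_day): (mean_seconds, sem_seconds)}.
    """
    buckets = defaultdict(list)
    for age, day, seconds in records:
        buckets[(age_tertile(age, cuts), day)].append(seconds)
    return {key: mean_and_sem(vals) for key, vals in buckets.items()}


ages = [25, 30, 35, 40, 45, 50, 55, 60, 65]
cuts = quantiles(ages, n=3)  # two cut points splitting the ages into thirds
print([age_tertile(a, cuts) for a in ages])  # [0, 0, 0, 1, 1, 1, 2, 2, 2]
```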

The paradata alone cannot explain these patterns. Older patients may find ePRO devices more challenging to use, which would explain why they take longer to complete the PROs, but our findings are also consistent with previous research showing that older people may read more slowly.9 The decrease in response time over the course of the study (which is especially rapid in the first month) may be explained by educational research on repeated reading, which showed that exposure to the same text on multiple occasions not only increased reading speed but also improved comprehension.10 Further research is needed to explain these trends fully.

Alerts and reminders drive ePRO response behavior

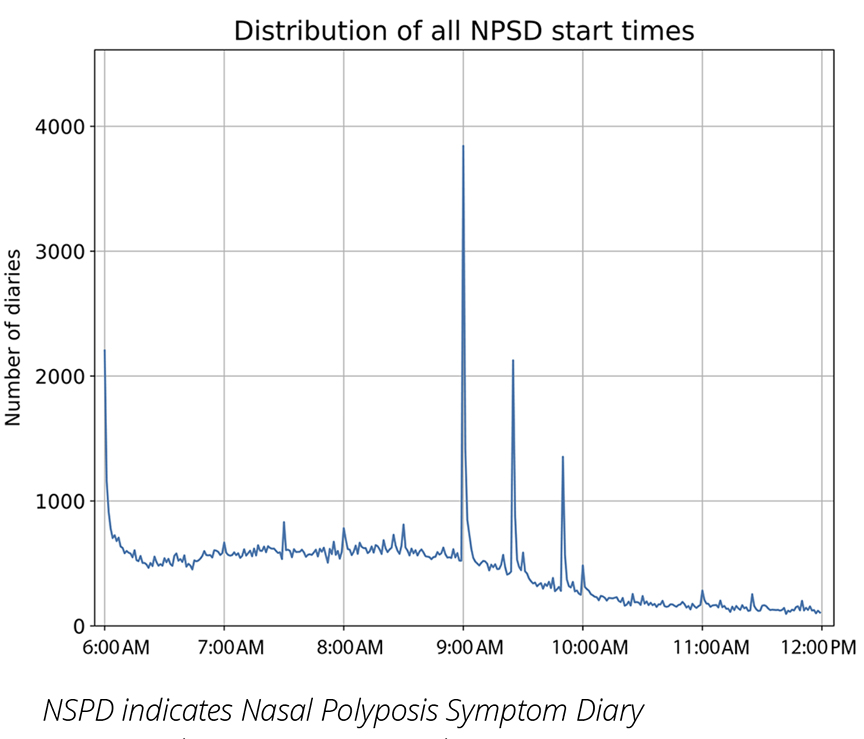

We can also use the PRO start timestamps to analyze how PRO completions are distributed across the daily response window. Figure 3 shows the start times of NPSDs in the OSTRO trial during the 6-hour morning window. The prominent spikes at 9:00 AM, 9:25 AM, and 9:50 AM correspond to the default reminder time on the device and to the two successive 25-minute snoozes, respectively. Patients could also change the default reminder time, which might explain the other spikes.

Data like these, collected from a large and diverse patient cohort, could help clinical study teams design better alert and reminder systems tailored to specific populations.

Figure 3: Number of NPSDs started in each minute of the morning window across the entire OSTRO trial (treatment period only). The spike at 9:00 AM suggests an effect of the reminder alert, followed by spikes from the default 25-minute snooze.
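The per-minute counts behind a plot like Figure 3, and the clock minutes where reminder-driven spikes would be expected, can be computed as below. The 9:00 AM default and the 25-minute snooze come from the text; the function names and the idea of summarizing the share of starts at those minutes are illustrative assumptions.

```python
from collections import Counter
from datetime import datetime, timedelta


def minute_histogram(starts: list[datetime]) -> Counter:
    """Count diary starts per clock minute ("HH:MM"), pooling all study days."""
    return Counter(s.strftime("%H:%M") for s in starts)


def alert_minutes(reminder: str = "09:00", snooze_minutes: int = 25,
                  n_snoozes: int = 2) -> list[str]:
    """Clock minutes at which the reminder and each successive snooze would fire."""
    t0 = datetime.strptime(reminder, "%H:%M")
    return [(t0 + timedelta(minutes=snooze_minutes * k)).strftime("%H:%M")
            for k in range(n_snoozes + 1)]


def share_at_minutes(hist: Counter, minutes: list[str]) -> float:
    """Fraction of all starts that fall exactly in the given clock minutes."""
    total = sum(hist.values())
    return sum(hist[m] for m in minutes) / total if total else 0.0


print(alert_minutes())  # ['09:00', '09:25', '09:50']
```

Patients who changed their reminder time would need their own setting passed to `alert_minutes`, assuming those settings are available in the paradata.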

Conclusions and Outlook

Analyzing adherence and timestamp paradata from ePRO devices in large longitudinal clinical trials offers valuable insights into patient behavior that would be difficult to obtain otherwise. These devices can potentially provide a wealth of additional raw data to explore for further behavioral insights.

While some patient populations may find ePRO devices more challenging than pen and paper, ePRO allows us to continuously monitor signs of problematic patient-device interactions, such as failed login attempts, devices running out of power, or PROs timing out. An unusually high number of such events on a specific device or at a particular site could suggest that a patient is struggling with the technology or that the site requires additional training and resources, respectively. By retrospectively analyzing these data across multiple studies, we can identify population-level trends and improve future ePRO device setups to anticipate and prepare for such issues before a clinical trial begins. One of the main challenges is that ePRO device providers rarely collect and share this kind of paradata with sponsors by default. To facilitate this work in the future, clinical trial sponsors should require more comprehensive data collection and sharing agreements in their trials.
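One simple way to operationalize "an unusually high number of such events" is to compare each device's or site's event rate with the median rate across all units. The sketch below flags units whose rate exceeds a multiple of that median; the threshold factor of 3 and the use of device-days as exposure are arbitrary, hypothetical choices, not a validated rule.

```python
from statistics import median


def flag_high_rate(events: dict[str, int], exposure: dict[str, float],
                   factor: float = 3.0) -> list[str]:
    """Units (devices or sites) whose event rate exceeds `factor` x the median rate.

    events:   unit -> count of problem events (e.g. failed logins, power-offs, timeouts)
    exposure: unit -> observation time, e.g. device-days (hypothetical choice)
    """
    rates = {u: events.get(u, 0) / exposure[u] for u in exposure}
    reference = median(rates.values())
    return sorted(u for u, r in rates.items() if r > factor * reference)


print(flag_high_rate({"A": 2, "B": 3, "C": 30, "D": 1},
                     {"A": 100.0, "B": 100.0, "C": 100.0, "D": 100.0}))  # ['C']
```

A median-based reference is less sensitive to a few extreme units than a mean. If most units report no events, the median is zero and any unit with an event is flagged, so a minimum event count may be needed in practice.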

Furthermore, we could leverage ePRO paradata to design better PROs. If the device records the time each patient takes to complete each PRO and tracks their journey through it (including detailed logs of their answers), this offers a convenient way to identify cognitively challenging questions. While such data may not currently be collected by default, there are no technical obstacles to collecting them. Such analyses would give clinical trial sponsors, ePRO device providers, and PRO developers actionable insights into patient behavior that can be used to enhance the patient experience without requiring any additional effort from patients themselves.
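If a device logged each screen view during a completion, per-item dwell time could be derived as below. The log format (a time-ordered list of `(item_id, shown_at_seconds)` events, with revisits allowed, plus a submission time) is hypothetical, since such logs are not collected by default. Ranking items by median dwell time is one plausible way to surface questions that may be cognitively demanding.

```python
from collections import defaultdict
from statistics import median


def item_dwell_times(log: list[tuple[str, float]], submitted_at: float) -> dict[str, float]:
    """Seconds spent on each item in one completion (revisits are summed).

    log: time-ordered (item_id, shown_at_seconds) screen events (hypothetical format).
    submitted_at: submission time on the same clock; closes the last screen.
    """
    next_times = [t for _, t in log[1:]] + [submitted_at]
    dwell = defaultdict(float)
    for (item, shown_at), left_at in zip(log, next_times):
        dwell[item] += left_at - shown_at
    return dict(dwell)


def rank_items(completions) -> list[str]:
    """completions: iterable of (log, submitted_at). Items sorted by median dwell, slowest first."""
    per_item = defaultdict(list)
    for log, submitted_at in completions:
        for item, seconds in item_dwell_times(log, submitted_at).items():
            per_item[item].append(seconds)
    return sorted(per_item, key=lambda i: median(per_item[i]), reverse=True)


log1 = [("Q1", 0), ("Q2", 5), ("Q3", 25), ("Q2", 30)]  # Q2 revisited before submitting
print(item_dwell_times(log1, submitted_at=40))  # {'Q1': 5.0, 'Q2': 30.0, 'Q3': 5.0}
```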

References

1. Kyte D, Ives J, Draper H, Calvert M. Current practices in patient-reported outcome (PRO) data collection in clinical trials: a cross-sectional survey of UK trial staff and management. BMJ Open. 2016;6(10):e012281. doi:10.1136/bmjopen-2016-012281

2. Coons SJ, Eremenco S, Lundy JJ, O’Donohoe P, O’Gorman H, Malizia W. Capturing patient-reported outcome (PRO) data electronically: the past, present, and promise of ePRO measurement in clinical trials. The Patient. 2015;8(4):301-309. doi:10.1007/s40271-014-0090-z

3. McKenzie S, Paty J, Grogan D, et al. Proving the eDiary dividend. Appl Clin Trials. 2004;13:54-69.

4. Abrams P, Paty J, Martina R, et al. Electronic bladder diaries of differing duration versus a paper diary for data collection in overactive bladder. Neurourol Urodyn. 2016;35(6):743-749. doi:10.1002/nau.22800

5. Nowojewski A, Bark E, Shih VH, Dearden R. What can we learn from one million completed daily questionnaires about patient compliance and burden? Value Health. 2022;25.

6. Aiyegbusi OL, Roydhouse J, Rivera SC, et al. Key considerations to reduce or address respondent burden in patient-reported outcome (PRO) data collection. Nat Commun. 2022;13(1):6026. doi:10.1038/s41467-022-33826-4

7. Bachert C, Han JK, Desrosiers MY, et al. Efficacy and safety of benralizumab in chronic rhinosinusitis with nasal polyps: a randomized, placebo-controlled trial. J Allergy Clin Immunol. 2022;149(4):1309-1317.e12. doi:10.1016/j.jaci.2021.08.030

8. O’Quinn S, Shih VH, Martin UJ, et al. Measuring the patient experience of chronic rhinosinusitis with nasal polyposis: qualitative development of a novel symptom diary. Int Forum Allergy Rhinol. 2022;12(8):996-1005. doi:10.1002/alr.22952

9. Brysbaert M. How many words do we read per minute? A review and meta-analysis of reading rate. J Mem Lang. 2019;109:104047.

10. Therrien WJ. Fluency and comprehension gains as a result of repeated reading: a meta-analysis. Remedial Spec Educ. 2004;25(4).