The Promise and Biases of Prediction Algorithms in Health Economics and Outcomes Research

Sara Khor, MASc; Aasthaa Bansal, PhD, The Comparative Health Outcomes, Policy, and Economics Institute, University of Washington School of Pharmacy, Seattle WA, USA

The Promise of Prediction Algorithms

There is growing interest in developing prediction algorithms for healthcare and health economics applications. “Big data” are becoming increasingly available with the integration of electronic health records and the standardization of administrative health databases. More recently, genome informatics and personal health monitoring wearables, such as smart watches and GPS trackers, have further pushed the boundaries of health data, the complexity and volume of which often exceed what the human brain can comprehend. Machine learning methods and advanced analytical tools enable meaningful processing of these troves of data. They promise to uncover insights that may be hidden from the human eye and to support higher-quality, more efficient care through personalized diagnoses and treatments based on collective data and knowledge.

Algorithms that predict the risk of an event, such as disease diagnosis, disease progression, or death, are frequently found in the clinical literature. These tools can be used in shared decision making to facilitate clinician–patient communication on risks and to guide decisions regarding testing and treatment. Smart algorithms can also be incorporated into health systems to reduce medical errors, recommend appropriate care, or identify patients who will derive the greatest benefit from population health programs.1,2 Predictive models have also been used for forecasting health spending and for risk adjustment of health plan payments, so that healthcare funding is allocated according to individuals’ risks.3,4 With appropriate risk adjustment, health plans and health organizations are incentivized to provide high-quality and efficient care and disincentivized from “cherry-picking” healthier individuals.

In addition to increasing processing power and efficiency, there is also hope that the use of algorithms can reduce the conscious and unconscious biases of human decisions. Logical automated algorithms, sometimes perceived as being free from human interference, are believed to have the potential to produce decisions that are devoid of human flaws and biases.

Defining the Problem

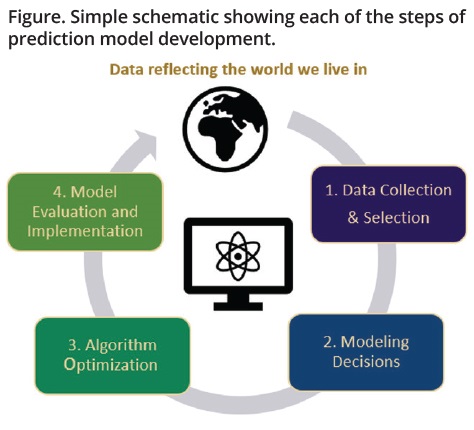

In reality, algorithms are often far from neutral. Because algorithms are developed by humans using data that reflect human behavior, bias can enter at every stage of their development (see Figure): (1) the collection of raw data (who is and who is not using the health system? Whose data are being captured in electronic health records?) and the selection of the model training dataset (who is excluded from the training data?); (2) modeling decisions about model inputs and structures; (3) the choice of metrics on which to optimize the algorithm; and (4) decisions on how to evaluate and implement the model once it is developed. Each of these steps involves human decisions, and each can therefore introduce bias. Even if every step is carried out perfectly, models are trained to mimic historical data that reflect the world we live in, which is plagued by structural racism and inequality. When algorithms are trained using biased data that are encoded with racial, gender, cultural, and political biases, the results may perpetuate or amplify existing discrimination and inequities.

Figure. Simple schematic showing each of the steps of prediction model development.

Awareness of Bias

Seemingly well-performing algorithms built using biased data or inappropriate methods can lead to erroneous or unsupported conclusions for certain populations. If these biased algorithms are used to make healthcare treatment or funding decisions, they are not only far from neutral but can also create, propagate, or amplify systemic biases and disparities in healthcare and health outcomes. Awareness of algorithmic bias in healthcare is growing, and many investigations have led to calls for the removal or revision of several existing problematic clinical tools. One example is a widely used risk-prediction tool that large health systems and payers rely on to target patients for expensive “high-risk care management” programs.2 The tool used past healthcare costs as a proxy for health when estimating clinical risk. Because White patients tended to have better and more frequent access to the health system, and therefore higher healthcare expenditures, than Black patients, the algorithm generated higher risk scores for White patients than for Black patients with similar health status. One implication of such differential scores is fewer referrals of Black patients to care management programs, which contributes further to racial disparities in healthcare and spending.
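
The mechanism behind this kind of label bias can be sketched with a toy example. The code below is only an illustration under stated assumptions: the two groups, the need scores, the `access` factors, and the program size are all hypothetical and are not taken from the tool or the study cited above. Both groups are given identical health needs, but one group's recorded cost is scaled down to mimic reduced access to care.

```python
# Toy illustration with hypothetical numbers: ranking patients by past cost
# instead of by underlying health need.

def build_cohort():
    """Two groups with identical need distributions but unequal access to care."""
    # Assumed access factors: the same need generates less recorded spending in B.
    access = {"A": 1.0, "B": 0.6}
    cohort = []
    for group, factor in access.items():
        for need in range(1, 11):  # need scores 1..10 in both groups
            cohort.append({"group": group, "need": need, "cost": need * factor})
    return cohort

def enroll_top(cohort, key, k):
    """Select the k patients with the highest value of `key`."""
    return sorted(cohort, key=lambda p: p[key], reverse=True)[:k]

def count_by_group(patients):
    """Count selected patients per group."""
    counts = {}
    for p in patients:
        counts[p["group"]] = counts.get(p["group"], 0) + 1
    return counts

cohort = build_cohort()
by_cost = count_by_group(enroll_top(cohort, "cost", 4))  # cost used as the proxy
by_need = count_by_group(enroll_top(cohort, "need", 4))  # true need, if observable

print("Selected using cost:", by_cost)
print("Selected using need:", by_need)
```

In this constructed example, ranking on cost fills all four program slots from group A, whereas ranking on need splits them evenly between the groups, even though the two groups are equally sick by design.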

The Debate Around the Inclusion of Race in Prediction Algorithms

At the center of identifying, addressing, and preventing racial biases in algorithms is the need to understand the meaning of race and fairness. Race is a social construct, and its effect on health reflects that of racism and inequities in socioeconomic, structural, institutional, cultural, and demographic factors. Currently there is a lack of consensus on how race and ethnicity should be included in prediction algorithms. Opponents of including race as a predictor in algorithms are concerned about racial profiling or that the use of individual features for decision making is inherently unfair, a concept sometimes referred to as “process fairness.”5 The use of a social construct as a predictor in algorithms may also erroneously suggest biological effects and condone false notions about biological inferiority among racial or ethnic minority groups. There are increasing concerns that race-based clinical decision making can result in unequal treatment across racial groups, promote stereotyping, and shift attention and resources away from addressing the root causes of disparities in illness, such as structural inequities and social determinants of health.6

These concerns around race-adjusted algorithms have led to calls for the removal of race from some existing clinical algorithms, such as the estimated glomerular filtration rate (eGFR) and the vaginal birth after cesarean (VBAC) calculators. The inclusion of race as a predictor in the eGFR algorithm resulted in higher eGFR values for Black patients, which suggested better kidney function.7 As a result, Black patients may be less likely than White patients to be referred to a specialist or to receive transplantation, further exacerbating the existing disparities in end-stage kidney disease and deaths among Black patients. Similarly, the VBAC algorithm systematically produces lower estimates of VBAC success for African American or Hispanic individuals, which may dissuade clinicians from offering trials of labor to people of color and contribute to the high maternal mortality rates among Black mothers.8 These tools have been widely criticized, and in many institutions across the United States the algorithms were revised to remove the adjustment for race.

Should race be removed from all algorithms? And more importantly, does the removal of race as a predictor make the algorithms fair? Some have argued that where accurate prognostication is the goal (for example, in algorithms that guide individual care plans such as cancer surveillance intensity), not considering race can result in inaccurate predictions that lead to inappropriate allocation of treatment, which can also differentially harm racial or ethnic minority groups. Additionally, the effects of race are often already encoded in other variables in the model. Researchers have argued that, without a full understanding and incorporation of the drivers of disparities in outcomes, race-blind models that simply ignore race may not be fair.

Colorectal Cancer Recurrence as a Case Study

To further understand how the inclusion or exclusion of race or ethnicity as a predictor in clinical algorithms affects model performance, we used colorectal cancer (CRC) recurrence as a case study. CRC recurrence risk models have been proposed to guide surveillance for recurrence in patients with cancer, with the goal of moving past one-size-fits-all surveillance approaches toward personalized follow-up protocols based on prognostic markers. Patients identified as being at high risk of recurrence could be recommended for more intensive surveillance, and those at low risk could be recommended for less frequent active surveillance, which can reduce the costs and burden associated with unnecessary visits and tests. Using data from a large integrated healthcare system, we fitted 3 risk prediction models that estimated patients’ risk of recurrence after undergoing resection. One model excluded race/ethnicity as a predictor (“race-blind”); one included race/ethnicity (“race-sensitive”); and the third was a stratified model in which separate models were built for each race/ethnicity subgroup.
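
To make the distinction between the 3 specifications concrete, the sketch below shows how their inputs might differ. It is not the case study's code: the records, the field names (`age`, `stage`, `race_ethnicity`, `recurred`), and the group labels are hypothetical placeholders, and no model is actually fitted here.

```python
# Hypothetical patient records; field names and values are illustrative only.
records = [
    {"age": 62, "stage": 2, "race_ethnicity": "group_1", "recurred": 0},
    {"age": 55, "stage": 3, "race_ethnicity": "group_2", "recurred": 1},
    {"age": 70, "stage": 1, "race_ethnicity": "group_1", "recurred": 0},
    {"age": 48, "stage": 3, "race_ethnicity": "group_3", "recurred": 1},
]

CLINICAL = ["age", "stage"]  # assumed clinical predictors

def race_blind_features(rec):
    """Clinical predictors only; race/ethnicity is not an input."""
    return [rec[name] for name in CLINICAL]

def race_sensitive_features(rec, categories):
    """Clinical predictors plus indicator variables for race/ethnicity.

    The first category is the reference level and gets no indicator.
    """
    indicators = [1 if rec["race_ethnicity"] == c else 0 for c in categories[1:]]
    return race_blind_features(rec) + indicators

def stratify(recs):
    """Split records by race/ethnicity so a separate model can be fitted per subgroup."""
    strata = {}
    for rec in recs:
        strata.setdefault(rec["race_ethnicity"], []).append(rec)
    return strata

categories = sorted({r["race_ethnicity"] for r in records})
X_blind = [race_blind_features(r) for r in records]                    # "race-blind"
X_sensitive = [race_sensitive_features(r, categories) for r in records]  # "race-sensitive"
strata = stratify(records)                                             # stratified
```

The race-blind and race-sensitive designs share one set of coefficients for the clinical predictors, while the stratified design lets every coefficient differ by subgroup at the cost of smaller samples per model.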

According to standard performance measures based on sensitivity and specificity, such as the area under the receiver operating characteristic (ROC) curve (AUC), the “race-blind” model performed well on the overall cohort (AUC=0.7) but had differential performance across racial subgroups (AUC ranging from 0.62 to 0.77).

Additionally, the model had lower sensitivities and higher false-negative rates among minority racial subgroups compared to non-Hispanic White individuals, suggesting that the model may be missing a disproportionate share of true cases in these subgroups. The implication of using such an algorithm for decision making is that more individuals in these minority racial subgroups who should be getting resources may not receive them. Interestingly, neither the inclusion of a predictor for race/ethnicity nor the use of race stratification addressed the problem of differential performance across racial groups, further underscoring the need for caution when developing and using these algorithms for decision making. Careful consideration of the role of race in prediction models is essential but may not be sufficient, and the simple omission or inclusion of race may not fix the problem of algorithmic bias. It is important for those making or using prediction algorithms to assess and report how these models perform in subgroups to ensure that they are not contributing to health disparities.
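
A subgroup performance report of the kind recommended here can be sketched in a few lines. The example below is a minimal illustration, not the analysis code from the case study: the function names, the 0.5 threshold, and the toy data are assumptions made for the example. AUC is computed directly from its definition as the probability that a randomly chosen case receives a higher score than a randomly chosen non-case.

```python
def auc(y_true, scores):
    """AUC as the probability that a random case outscores a random non-case."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        return None  # undefined unless both outcomes are present
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def sensitivity(y_true, scores, threshold):
    """Share of true cases flagged as high risk (score >= threshold)."""
    case_scores = [s for y, s in zip(y_true, scores) if y == 1]
    if not case_scores:
        return None
    return sum(s >= threshold for s in case_scores) / len(case_scores)

def report_by_group(y_true, scores, groups, threshold):
    """Return AUC, sensitivity, and false-negative rate overall and per subgroup."""
    rows = {}
    for label in ["overall"] + sorted(set(groups)):
        idx = [i for i, g in enumerate(groups) if label == "overall" or g == label]
        y = [y_true[i] for i in idx]
        s = [scores[i] for i in idx]
        sens = sensitivity(y, s, threshold)
        rows[label] = {
            "n": len(idx),
            "auc": auc(y, s),
            "sensitivity": sens,
            "fnr": None if sens is None else 1 - sens,  # false-negative rate
        }
    return rows

# Made-up toy data: 1 = recurrence, scores = predicted risk, two subgroups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
scores = [0.9, 0.8, 0.3, 0.2, 0.6, 0.3, 0.4, 0.1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

report = report_by_group(y_true, scores, groups, threshold=0.5)
for label, row in report.items():
    print(label, row)
```

With these made-up numbers the overall AUC is about 0.91, yet subgroup B has an AUC of 0.75 and a false-negative rate of 0.5, so half of its true cases would be missed at this threshold. The pattern, not the values, is the point.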

Looking Ahead

Our case study showed that simply evaluating and reporting algorithm performance using a single metric (eg, AUC) in the overall sample can be misleading and may conceal the models’ differential performance across subpopulations. While our case study illustrated the unfairness in model performance across racial subgroups, it is important to point out that there is no universal definition of fairness and the choice of fairness criteria may depend on the context and decision makers’ value judgment.
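
As an illustration of how fairness criteria can differ, two commonly discussed definitions are written out below. The notation is assumed for this sketch and does not come from the case study: $Y$ is the observed outcome (1 = event), $\hat{Y}$ is the model's classification at a chosen threshold, and $G$ is the subgroup, with $a$ and $b$ denoting any two subgroups.

```latex
% Assumed notation: Y = observed outcome, \hat{Y} = classification, G = subgroup.
\begin{align*}
\text{Equal sensitivity:}\quad
  & P(\hat{Y}=1 \mid Y=1,\, G=a) \;=\; P(\hat{Y}=1 \mid Y=1,\, G=b) \\
\text{Equal positive predictive value:}\quad
  & P(Y=1 \mid \hat{Y}=1,\, G=a) \;=\; P(Y=1 \mid \hat{Y}=1,\, G=b)
\end{align*}
```

The first criterion asks that true cases be equally likely to be flagged in every subgroup, which is the property examined through sensitivities and false-negative rates in the case study. The second asks that a positive flag carry the same meaning in every subgroup. A model can satisfy one without satisfying the other, which is one reason the choice among criteria involves a value judgment.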

Predictive algorithms have the potential to improve healthcare decisions and revolutionize payment models, but to fully harness that potential to improve healthcare and outcomes for all people, ethical considerations need to be built into the development and adoption of these models. The shift towards fair algorithms requires transparent and thoughtful model-building approaches and critical appraisal by deliberate users, including clinicians, health systems, researchers, and patients. With conscious antiracist approaches and engagement of the broad community and diverse stakeholders, predictive algorithms carry the potential to fulfill their original promise: to harness data and knowledge to help us make decisions that could lead to better and fairer healthcare for all.

References

1. Corny J, Rajkumar A, Martin O, et al. A machine learning-based clinical decision support system to identify prescriptions with a high risk of medication error. J Am Med Inform Assoc. 2020;27(11):1688-1694.

2. Obermeyer Z, Powers B, Vogeli C, Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science. 2019;366(6464):447-453.

3. Rose S. A machine learning framework for plan payment risk adjustment. Health Serv Res. 2016;51(6):2358-2374.

4. Navarro SM, Wang EY, Haeberle HS, et al. Machine learning and primary total knee arthroplasty: patient forecasting for a patient-specific payment model. J Arthroplasty. 2018;33(12):3617-3623.

5. Grgic-Hlaca N, Zafar MB, Gummadi K, Weller A. The case for process fairness in learning: feature selection for fair decision making. Presented at: Symposium on Machine Learning and the Law at the 29th Conference on Neural Information Processing Systems; 2016; Barcelona, Spain.

6. Vyas DA, Eisenstein LG, Jones DS. Hidden in plain sight—reconsidering the use of race correction in clinical algorithms. N Engl J Med. 2020;383(9):874-882.

7. Eneanya ND, Yang W, Reese PP. Reconsidering the consequences of using race to estimate kidney function. JAMA. 2019;322(2):113-114.

8. Vyas DA, Jones DS, Meadows AR, Diouf K, Nour NM, Schantz-Dunn J. Challenging the use of race in the vaginal birth after cesarean section calculator. Womens Health Issues. 2019;29(3):201-204.