Artificial Intelligence Is Leveling Up HEOR, but Still Needs a Humanity Check

By Beth Fand Incollingo

The evolution of artificial intelligence (AI) has rapidly accelerated in just the past few years, amplifying the potential impact of health economics and outcomes research (HEOR) on patients and public health. The technology is enabling HEOR professionals to do more complex analyses, drawing on vast amounts of data, faster than ever. But concerns remain about the accuracy and ethical use of AI for healthcare research and patient care, and HEOR experts say it’s essential for humans to retain oversight of AI-driven clinical decisions.

Two decades ago, AI’s role in HEOR involved using machine learning to support predictive modeling of health outcomes and costs,1-3 but in the past few years, the emergence of new AI tools has dramatically expanded those capabilities.

Today, HEOR professionals can aggregate and synthesize vast amounts of content using generative AI,4 such as large language models, which can “converse” with them in writing, answer their questions, and even draft research papers and dossiers.5 The field has also started to adopt agentic AI,6 which works autonomously to gather and analyze data, set goals, make decisions, and even learn from those experiences.

AI’s promise in healthcare is vast, from its current capacity to match patients with treatments and improve the diagnostic potential of medical imaging to a future vision of digital twins that could support individual health. The technology’s impact was recognized in 2024, when 3 scientists won the Nobel Prize in Chemistry for AI-driven innovations that have changed our understanding of proteins, creating new avenues for drug discovery.7

While the latest iterations of AI are clearly benefiting HEOR professionals, they also raise concerns about how the technology can be used responsibly in research. Even the most extensive AI databases may be missing key information needed for clinical decision making, and AI-generated responses carry a known risk of being biased or inaccurate.5 Although HEOR experts acknowledge that AI offers advantages too important to overlook, they caution that humans must remain involved in all the work AI touches to ensure that the work achieves its goals.

“We have to stop thinking of AI as a replacement and start thinking of it as an augmentation to our workflows,” said Harlen Hays, MPH, who oversees 3 research teams at Cardinal Health, a company that supplies products and insights to healthcare stakeholders. “It is a tool, and that tool is only as good as your skill at using it. It does open up the ability to spend more of your time in HEOR focused on strategy and a lot less on day-to-day tactical tasks.”

Integrating AI into HEOR

The generative and agentic functions used in HEOR are applications built on AI foundation models: large systems trained on enormous data sets using self-supervised learning. These models are versatile enough to support a range of applications and adaptable enough to perform tasks, such as text summarization and information extraction, that are particularly useful for HEOR.5

In HEOR, “generative AI is increasingly used to synthesize complex literature, provide overviews of research topics, identify gaps, and support tasks like content organization and improving readability,” said Alina Helsloot, director of generative AI for scientific, technical, and medical journals at Elsevier, a global leader in advanced information and decision support for science and healthcare.

Axel Mühlbacher, PhD, a professor and researcher focused on health economics and healthcare management at Hochschule Neubrandenburg, in Germany, uses the technology to structure data he’s collected from patient interviews and to conduct literature searches, with the goal of highlighting population subsets and their healthcare decisions. With AI, he no longer needs research assistants to create final reports, and he can more efficiently develop fictional patient personas that represent specific populations.

“AI can summarize information with a speed we’ve never known before, and despite the critique that some of it might not be perfect, it’s hard to find somebody who could do a better job in the same amount of time, or even in 10 times the amount of time,” Mühlbacher said.

The HEOR teams that Hays oversees at Cardinal focus on real-world evidence, deploying large language models from various vendors to seek answers to complex medical questions. Cardinal’s AI Center of Excellence tailors that technology by putting “wrappers” around the models for particular use cases, which adds context and specialization and enables the system to learn faster.8

Hays’s scientists use generative AI to review literature about patient populations, and his data engineering group uses it to scour structured and unstructured health records from community oncology settings (including doctors’ notes in patient charts) to find individuals who might benefit from certain treatments. Meanwhile, his data enablement team has assigned agentic AI to search aggregated, de-identified patient data for patterns that might be worth studying.

Despite AI’s impressive abilities, Hays cautioned, “you need the human-in-the-loop component to truly understand if results are accurate. We’ve had times in literature synthesis where the AIs fail and give us an erroneous therapy for a condition.”

Because agentic AI acts autonomously, Hays added, researchers are needed to set boundaries and, when patient information is searched, to build in privacy and security measures. It’s also up to HEOR experts to discern whether patterns identified by agentic AI for potential study are reliable, he said. “Is it trying to find an answer to make me happy?”

Finally, Hays said, when agentic AI assists in drug discovery, humans are needed at the back end to formulate strategies for regulatory submissions, marketing plans, and reimbursement arguments.

“Over the next 5 to 10 years, AI will replace the redundant tasks that people in HEOR don’t want to do anyway,” he predicted. “Instead, humans will spend a lot more time putting context around findings.”

A Shift in Attention

AI is creating another kind of transformation within HEOR as it is integrated into medical devices and advanced systems for hospital management.

This has some HEOR professionals shifting their focus, leveraging the expanding pool of real-world patient data to keep pace with an increasing demand for data about the medical and economic value of AI-powered devices and organizational strategies.

One trend driving this shift involves the pairing of AI-driven software like Google DeepVariant with platforms for next-generation sequencing, such as Illumina NextSeq systems, in efforts by scientists to identify genetic mutations that drive disease.9,10

The focus is a bit different at GE HealthCare, where cloud-based AI and machine learning are being deployed to support care teams, enhance patient outcomes, and boost efficiency for health systems, said Parminder Bhatia, the company’s chief AI officer.

As of December 2025, GE HealthCare had received 115 authorizations from the US Food and Drug Administration (FDA) for AI-enabled medical devices.

The company’s latest AI-enabled and cloud technologies include:

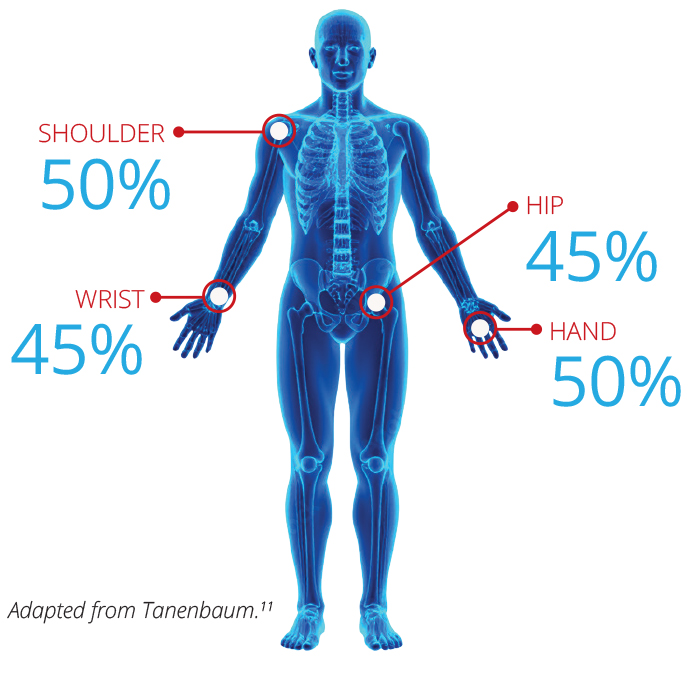

- AIR Recon DL, a deep learning application that enables magnetic resonance imaging machines to produce sharper images in up to half the time,11 increasing convenience for patients and efficiency for hospitals (Figure).

- Intelligent RT (iRT), which consolidates the multivendor, multistep radiation therapy workflow into a unified view. Early adopters that integrated iRT with RayStation by RaySearch Laboratories cut the time from simulation to treatment planning from 7 days to 7 minutes.12

- CareIntellect for Perinatal, a cloud-first application that aggregates data from multiple sources to support clinical decision making, speed time to treatment, and improve maternal and fetal outcomes.13 Worldwide, about 700 women die every day from causes related to pregnancy and childbirth,14 and Bhatia noted that 80% of those deaths are preventable. “By streamlining monitoring, nurse efficiency in handing off patients, and documentation when every second counts, we’re moving from reactive to proactive,” he said.

Figure: Percent reduction in exam times associated with use of AIR Recon DL

To improve healthcare operations, GE HealthCare offers Command Center, which streamlines patient movement through and out of the hospital. Within a year of adopting the AI-enabled software, The Queen’s Healthcare Systems in Hawaii had reduced patients’ length of stay by more than a day, translating into $20 million in estimated savings and the ability to accept up to 100 new transfer cases per month, a 22% increase, Bhatia said.15

A human-in-the-loop component is built into these solutions, too, he said.

“One example is our ultrasound devices that integrate Caption Health AI technologies,” he said. “When taking a cardiac scan, these devices give sonographers a navigation system so they can get an image of good diagnostic quality, whether they have 1 year of experience or 15. But at the end of that process, a human makes decisions about the patient’s care.”16

New Potential, New Ethical Concerns

Despite its promise, AI has vulnerabilities that present ethical dilemmas, including its tendency to return “hallucinations,” or false findings. For instance, media outlets reported in July 2025 that Elsa, the FDA’s generative AI tool for expediting the drug-approval process, was hallucinating studies that didn’t exist and misinterpreting research.17

In its research and health tools, Elsevier works to minimize hallucinations by ensuring that information retrieved in AI searches is clearly linked to exact passages in original articles, book chapters, or other sources, Helsloot said. To accomplish this, the company employs human feedback, assigns large language models to evaluate the outputs of the AI systems that conduct searches, and stress-tests its systems to ferret out weaknesses.18

Meanwhile, Scopus AI, which enables users to search Elsevier’s immense database of published content, includes a tool that helps identify hallucinations by classifying each response according to the system’s confidence in its relevance and completeness. Its outputs are also subject to thorough, ongoing evaluation by human experts.18
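To make the idea of grounding concrete, here is a minimal Python sketch under simplifying assumptions: each AI-generated claim arrives with the passage it cites and a model-reported confidence score. The code checks whether the quoted passage actually appears in the cited source and routes unsupported or low-confidence claims to a human reviewer. The `Claim` structure, the 0.8 threshold, and the exact-substring check are hypothetical illustrations, not Elsevier’s or Scopus AI’s actual method.

```python
import re
from dataclasses import dataclass


@dataclass
class Claim:
    """Hypothetical AI-generated statement plus the passage it cites."""

    text: str
    source_id: str       # identifier of the cited article or chapter
    quoted_passage: str  # passage the AI says supports the claim
    confidence: float    # model-reported confidence, 0.0 to 1.0


def normalize(s: str) -> str:
    """Lowercase and collapse whitespace so formatting differences don't matter."""
    return re.sub(r"\s+", " ", s).strip().lower()


def triage(claims: list[Claim], sources: dict[str, str],
           min_confidence: float = 0.8) -> tuple[list[Claim], list[Claim]]:
    """Split claims into those passing automated checks and those needing review.

    A claim passes only if its cited source exists, the quoted passage appears
    in that source (after normalization), and its confidence meets the
    threshold. Everything else is flagged for a human expert.
    """
    accepted: list[Claim] = []
    needs_review: list[Claim] = []
    for claim in claims:
        source_text = sources.get(claim.source_id)
        grounded = (
            source_text is not None
            and normalize(claim.quoted_passage) in normalize(source_text)
        )
        if grounded and claim.confidence >= min_confidence:
            accepted.append(claim)
        else:
            needs_review.append(claim)
    return accepted, needs_review


if __name__ == "__main__":
    sources = {
        "study-1": "Adherent patients had lower total health care costs "
                   "despite higher drug spending.",
    }
    claims = [
        Claim("Adherence lowered total costs.", "study-1",
              "lower total health care costs", 0.93),
        # Confident but ungrounded: the passage is not in the source.
        Claim("Adherence doubled drug spending.", "study-1",
              "drug spending doubled", 0.91),
        # Cites a source that does not exist (a hallucinated study).
        Claim("A cited trial showed benefit.", "study-99",
              "significant benefit", 0.95),
    ]
    ok, review = triage(claims, sources)
    print(len(ok), len(review))  # prints: 1 2
```

The design choice here is that a high confidence score alone never clears a claim; it must also be traceable to a real passage. A production system would likely need fuzzier or semantic matching rather than exact substrings, which is one reason human evaluation stays in the loop.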

AI works on probabilities, and mistakes happen when it assumes that one piece of information should follow another, Mühlbacher said.

“There is research showing that these hallucinations are embedded within the logic of AI,” he said. “And yet, it’s impossible to be in competition with somebody who is using the superpower of AI and not use it yourself.”

Hallucinations can hurt researchers by impugning their credibility and can harm patients by misrepresenting the likelihood of a positive or negative outcome, Hays said.

“What is the cost in healthcare if AI hallucinates that a patient was not eligible for a clinical trial when, in fact, they were?” he asked. “That’s where we get into the human-in-the-loop aspect, but if people have to review every chart again, did we actually help by using AI, or did we actually just create more work?”

Another concern about AI is the vast amount of energy it uses.19

“AI doesn’t sleep, it doesn’t take breaks, and it doesn’t take holidays,” Hays said. “At some point, are we going to create enough climate change or pollution that our work starts to negatively affect human health?”

To provide guidance about how to navigate these issues, the World Health Organization and ISPOR have published ethical frameworks around AI in healthcare and HEOR.20-22 But Hays suspects that enforceable, global regulatory frameworks will be needed to rein in AI’s energy consumption—and it won’t happen without industry buy-in.

“The big leaders in this area need to be having these conversations: OpenAI, Google, AWS, Microsoft, Claude,” Hays said. “At some point, there will have to be regulation, but regulation is always way behind innovation.”

Science Fiction or Simply the Future?

With everything AI can do, its potential in healthcare seems boundless.

In the future, Hays imagines that agentic AI will be able to flag new studies as they are added to ClinicalTrials.gov and make lists of patients who might be eligible. AI might even make truly personalized medicine possible, he said, by helping to devise individual treatment plans for people with rare conditions.23

In health technology assessment, AI may help regulatory panels use a more inclusive approach to drug approval decisions by creating personas that represent the patients who could benefit, Mühlbacher said.

“These decisions would normally be made by panels of about 6 people, usually older and predominantly male,” he said. “Can they really assess the benefits and risks for other populations, maybe female, maybe younger? My brain’s not big enough, but AI can do it.”

Similarly, Mühlbacher said, when a patient’s eligibility for lifesaving drugs must be debated immediately, tumor boards might eventually be populated by AI personas rather than people.

But perhaps the most fantastical use of AI on the near horizon is the creation of digital twins, or virtual patients, which will incorporate a consenting individual’s personality and point of view, physique, lifestyle details, and medical history collected through health records and wearable devices.24,25 These twins will be able to represent patients at doctor’s appointments while their human counterparts stay at home, test potential treatments for an illness to determine which will work best, and even independently make medical decisions, Mühlbacher said.

Hays guesses that digital twins could also become crucial as controls in clinical trials, something Roche is already exploring.26,27 Deploying them to create synthetic control arms could allow retrospective data to virtually mature within a predictive model, he said; digital twins might also populate standard-of-care control arms in oncology clinical trials, for example, so that all participating humans can receive investigational drugs.

Those are exciting visions, Hays said, but they come with concerns.

“By allowing a patient’s data to mature into something different, which may or may not be true to life, we’d be introducing a lot of potential for error,” he said. “At what point is a digital twin no longer the patient? While we use digital twins in marketing all the time, the impact is smaller there. In healthcare, the repercussions of a false study are much higher, so we would have to add more rigor.”

Mühlbacher wonders how much personal health information patients should be willing to share to create their digital twins, and what kinds of risks could ensue if those data were misused.

“Will a time come when AI is smarter than humans?” he asked. “What could happen, then, if humans were competing with AI for resources?”

Moving Ahead With AI

As HEOR professionals rely more and more on AI, they’ll need to understand how to ask it questions that will elicit their intended results.

In both generative and agentic AI, prompt engineering involves not only asking a question in the most effective way but also directing the technology to take on specific personas while searching for answers.28 “The result actually changes if the AI considers itself a computer engineer versus a researcher,” said Hays, whose company is providing training in the discipline for its employees.

To complicate matters, he said, identical questions and personas may elicit varying answers if different AI systems are used.

As a result, Hays said, HEOR professionals must be transparent in their published studies about the queries and AI versions they used, so that other scientists can seek to reproduce their results.

That’s just 1 of many checks and balances that Elsevier requires of authors who use AI in their published research and writing, Helsloot said.

“When applied responsibly and with human oversight, we support the use of AI tools by authors, including AI agents and deep research tools,” she said. “However, AI is not a substitute for human critical thinking, and authors are responsible and accountable for the content of the manuscript, including accountability for reviewing and editing content, ensuring privacy, and disclosing the use of AI upon submission.”
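Hays’s advice to disclose the queries and AI versions behind a study can be put into practice by logging, for every query, the persona instruction, the exact prompt, the model name and version, and the sampling settings alongside the response. The Python sketch below assumes a generic chat-style message format (a system message carrying the persona, a user message carrying the question); the field names, the `example-llm` identifier, and the JSON Lines log file are hypothetical choices, and no specific vendor API is called.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_messages(persona: str, question: str) -> list[dict]:
    """Assemble a chat-style prompt in which the system message sets the persona."""
    return [
        {"role": "system", "content": f"You are {persona}."},
        {"role": "user", "content": question},
    ]


def make_record(model: str, model_version: str, messages: list[dict],
                response: str, temperature: float = 0.0) -> dict:
    """Capture what another team would need to rerun the same query."""
    prompt_json = json.dumps(messages, sort_keys=True)
    return {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "model": model,                  # hypothetical model identifier
        "model_version": model_version,  # exact version or snapshot used
        "temperature": temperature,      # sampling settings also affect output
        "messages": messages,
        # A hash makes it easy to confirm two runs used an identical prompt.
        "prompt_sha256": hashlib.sha256(prompt_json.encode("utf-8")).hexdigest(),
        "response": response,
    }


def append_to_log(path: str, record: dict) -> None:
    """Append one record per line (JSON Lines), e.g., for a supplementary appendix."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


if __name__ == "__main__":
    question = "Summarize published real-world evidence on adherence to oral cancer therapies."
    # The same question under two personas can yield different answers,
    # so each run gets its own record.
    for persona in ("a health economics researcher", "a computer engineer"):
        messages = build_messages(persona, question)
        # The model call itself is omitted; any vendor client could go here.
        record = make_record("example-llm", "example-version-1", messages,
                             response="<model output here>")
        print(json.dumps(record, indent=2))
```

Hashing the serialized prompt gives reviewers a quick way to check that a replication used exactly the same wording and persona, while the version and temperature fields address the point that identical questions can produce different answers on different systems.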

Learn More About HEOR and AI

In This Issue:

- Integrating Artificial Intelligence Into Systematic Literature Reviews: A Review of Health Technology Assessment Guidelines and Recommendations

- Harnessing Large Language Models in Health Economics and Outcomes Research: Overcoming the Hallucination Hazard

- From Concept to Commercialization: AI’s Emerging Role in HEOR

Value in Health Journal:

- Themed Section on HEOR and AI (November 2025)

- ISPOR Working Group Report on AI Taxonomy in HEOR (November 2025)

- ISPOR Working Group Report on Guidelines for Use of LLMs in HEOR (November 2025)

- ISPOR Working Group Report on AI for HTA (February 2025)

ISPOR Education:

- Streamlining Systematic Literature Reviews With Software and AI

- The Application of AI in Clinical Outcome Assessment Research

References:

- Roebuck C. AI in HEOR: Reflections on early innovations. Rxeconomics. Published March 19, 2025. Accessed November 18, 2025. https://www.rxeconomics.com/insights/ai-in-health-economics

- Roebuck MC, Liberman JN, Gemmill-Toyama M, Brennan TA. Medication adherence leads to lower health care use and costs despite increased drug spending. Health Aff (Millwood). 2011;30(1):91-99. doi:10.1377/hlthaff.2009.1087.

- Doupe P, Faghmous J, Basu S. Machine learning for health services researchers. Value Health. 2019;22(7):808-815.

- Fleurence RL, Wang X, Bian J, et al. A taxonomy of generative artificial intelligence in health economics and outcomes research: An ISPOR Working Group report. Value Health. 2025;28(11):1601-1610.

- Avalere Health. Contextualizing artificial intelligence for HEOR in 2023. Published November 1, 2025. Accessed November 18, 2025. https://advisory.avalerehealth.com/insights/contextualizing-artificial-intelligence-for-heor-in-2023

- Lee K, Paek H, Ofoegbu N, et al. A4SL: An agentic artificial intelligence-assisted systematic literature review framework to augment evidence synthesis for health economics and outcomes research and health technology assessment. Value Health. 2025;28(11):1655-1664.

- Chan K, Larson C, Valdes M. Nobel Prize in Chemistry honors 3 scientists who used AI to design proteins – life’s building blocks. AP. Published October 9, 2024. Accessed November 18, 2025. https://apnews.com/article/nobel-chemistry-prize-56f4d9e90591dfe7d9d840a8c8c9d553

- Cardinal Health. Inside Cardinal Health’s AI Center of Excellence. Published March 26, 2025. Accessed November 18, 2025. https://newsroom.cardinalhealth.com/Inside-Cardinal-Healths-AI-Center-of-Excellence

- Ultima Genomics. Ultima Genomics partners with Sentieon and Google DeepVariant to deliver high-performance variant calling. Published May 31, 2022. Accessed November 18, 2025. https://www.ultimagenomics.com/blog/ultima-genomics-partners-with-sentieon-and-google-deepvariant-to-deliver-high-performance/

- Elric G. Google’s DeepVariant: AI with precision healthcare. AI For Healthcare. Published May 20, 2025. Accessed November 18, 2025. https://aiforhealthtech.com/googles-deepvariant-ai-with-precision-healthcare/

- Tanenbaum L. Shorter exam times and more predictive patient scheduling with AIR Recon DL. GE HealthCare. Published 2021. Accessed December 2, 2025. https://www.gehealthcare.com/-/jssmedia/gehc/us/files/products/molecular-resonance imaging/air/airrecondltanenbaumsellsheetmijb03495xxglob.pdf

- GE HealthCare. GE HealthCare debuts AI-supported solution, designed to improve and shorten the radiation therapy workflow, at ASTRO 2025. Published September 25, 2025. Accessed December 2, 2025. https://www.gehealthcare.com/about/newsroom/press-releases/ge-healthcare-debuts-ai-supported-solution-designed-to-improve-and-shorten-the-radiation-therapy-workflow-at-astro-2025

- GE HealthCare. How digital perinatal solutions strengthen care consistency and continuity to improve maternal health outcomes. Published August 18, 2025. Accessed December 2, 2025. https://clinicalview.gehealthcare.com/infographic/how-digital-perinatal-solutions-strengthen-care-consistency-and-continuity-improve

- World Health Organization. Maternal mortality. Published April 7, 2025. Accessed November 18, 2025. https://www.who.int/news-room/fact-sheets/detail/maternal-mortality

- GE HealthCare. GE HealthCare collaborates with two major medical systems to advance AI technology designed to transform hospital operations and improve patient care. Published October 20, 2025. Accessed November 18, 2025. https://www.gehealthcare.com/about/newsroom/press-releases/ge-healthcare-collaborates-with-two-major-medical-systems-to-advance-ai-technology-designed-to-transform-hospital-operations-and-improve-patient-care

- GE HealthCare. GE HealthCare to acquire Caption Health, expanding ultrasound to support new users through FDA-cleared, AI-powered image guidance. Published February 9, 2023. Accessed November 18, 2025. https://www.gehealthcare.com/about/newsroom/press-releases/ge-healthcare-to-acquire-caption-health-expanding-ultrasound-to-support-new-users-through-fda-cleared-ai-powered-image-guidance-

- Shryock T. FDA’s new AI tool “Elsa” faces accuracy concerns despite commissioner’s high hopes. Med Econ. Published July 23, 2025. Accessed December 2, 2025. https://www.medicaleconomics.com/view/fda-s-new-ai-tool-elsa-faces-accuracy-concerns-despite-commissioner-s-high-hopes

- Evans I. Preventing AI hallucinations in our research and health tools. Elsevier. Published February 24, 2025. Accessed November 18, 2025. https://www.elsevier.com/connect/preventing-ai-hallucinations-in-our-research-and-health-tools

- UN Environment Programme. AI has an environmental problem. Here’s what the world can do about that. Published November 13, 2025. Accessed November 18, 2025. https://www.unep.org/news-and-stories/story/ai-has-environmental-problem-heres-what-world-can-do-about

- World Health Organization. WHO issues first global report on Artificial Intelligence (AI) in health and six guiding principles for its design and use. Published June 28, 2021. Accessed November 18, 2025. https://www.who.int/news/item/28-06-2021-who-issues-first-global-report-on-ai-in-health-and-six-guiding-principles-for-its-design-and-use

- World Health Organization. WHO releases AI ethics and governance guidance for large multi-modal models. Published January 18, 2024. Accessed November 18, 2025. https://www.who.int/news/item/18-01-2024-who-releases-ai-ethics-and-governance-guidance-for-large-multi-modal-models

- Fleurence RL, Dawoud D, Bian J, et al. ELEVATE-GenAI: Reporting guidelines for the use of large language models in health economics and outcomes research: An ISPOR Working Group report. Value Health. 2025;28(11):1611-1625. doi:10.1016/j.jval.2025.06.018.

- Karako K. Artificial intelligence applications in rare and intractable diseases: Advances, challenges, and future directions. Intractable Rare Dis Res. 2025;14(2):88-92. doi:10.5582/irdr.2025.01030.

- Gabelein K. A patient twin to protect John’s heart. Siemens Healthineers. Published August 16, 2022. Accessed November 18, 2025. https://www.siemens-healthineers.com/perspectives/patient-twin-johns-heart

- Thangaraj PM, Benson SH, Oikonomou EK, Asselbergs FW, Khera R. Cardiovascular care with digital twin technology in the era of generative artificial intelligence. Eur Heart J. 2024;45(45):4808-4821. doi:10.1093/eurheartj/ehae619.

- Rodero C, Baptiste TMG, Barrows RK. A systematic review of cardiac in-silico clinical trials. Prog Biomed Eng. 2023;5(3):032004. doi:10.1088/2516-1091/acdc71.

- Schmich F. Towards digital twins for clinical trials. PRISME Forum. Published May 21, 2024. Accessed November 18, 2025. https://prismeforum.org/wp-content/uploads/2024/05/PRISME-Forum-2024-Fabian-Schmich-Digital-Twins-1.pdf

- Shastri S. The art and discipline of prompt engineering. Communications of the ACM. Published October 27, 2025. Accessed November 18, 2025. https://cacm.acm.org/blogcacm/the-art-and-discipline-of-prompt-engineering/

Beth Fand Incollingo is a freelance writer who reports on scientific, medical, and university issues.