Harnessing Large Language Models in Health Economics and Outcomes Research: Overcoming the Hallucination Hazard

Manuel Cossio, MMed, MEng, Cytel Inc, Geneva, Switzerland, Universitat de Barcelona, Barcelona, Spain; Benjamin D. Bray, MBChB, MD, Lane Clark & Peacock, London, United Kingdom

Introduction

There is considerable interest in the potential for generative artificial intelligence (AI) to enable new capabilities or automate aspects of health economics and outcomes research (HEOR). Many HEOR tasks, such as literature review, evidence synthesis, programming, adapting health economic models, and writing reports, are manual and time-consuming. Generative AI can be a helpful partner, making some of these activities more manageable and efficient.

Large language models (LLMs) are designed to predict and generate plausible language, making them powerful tools for various applications. They work by predicting how likely a token (a piece of text such as a word or part of a word) is to appear next in a longer sequence of tokens. The term “large” in LLMs refers to the vast number of parameters they possess. For instance, Meta’s LLaMA 3.1 has 405 billion parameters, nearly 7 million times more than one of the first convolutional neural networks (CNNs) used for digit recognition, which had only about 60,000 parameters.1 This immense scale allows LLMs to handle and generate high volumes of written data with remarkable versatility.
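To make the idea of token prediction concrete, the following minimal Python sketch turns a set of raw scores into next-token probabilities with a softmax function. The candidate tokens and their scores are made-up values for illustration; a real LLM computes such scores over a vocabulary of many thousands of tokens.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the maximum for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}

# Hypothetical scores for the token that follows
# "The incremental cost-effectiveness" (illustrative numbers only).
logits = {" ratio": 4.0, " analysis": 2.0, " banana": -3.0}

probs = softmax(logits)
next_token = max(probs, key=probs.get)  # the most likely continuation

for tok, p in sorted(probs.items(), key=lambda item: -item[1]):
    print(f"{tok!r}: {p:.3f}")
```

Note that the model only ranks continuations by likelihood; nothing in this step checks whether the chosen continuation is factually correct.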

As with any new technology, the path to large-scale adoption is not straightforward. One of the critical challenges in using LLMs in HEOR is the risk of generating inaccurate or misleading information, often referred to as “hallucinations.” Hallucinations are instances where the model generates information that is not based on the input data or real-world facts. These hallucinations can be very plausible and hard to detect, potentially making LLMs risky or unreliable for specific tasks.

We believe, however, that hallucinations are a tractable problem, at least for many potential applications in HEOR. This guide aims to navigate these hazards, providing practical advice on hallucination mitigation tactics to allow LLMs to be used in a risk-proportionate way for HEOR activities.

The Mechanics of LLMs

LLMs are neural networks that utilize part of a specialized architecture known as transformers. Transformers are designed to process sequential data by leveraging self-attention mechanisms, which allow them to effectively capture and utilize context within the data.

Transformers offer 2 significant advantages over their predecessors, such as long short-term memory (LSTM) networks. First, transformers excel at managing longer text sequences. LSTM networks often struggled with lengthy sequences, frequently forgetting the initial words as the sequence grew longer. In contrast, transformers can handle extensive text without losing track of the context, making them far more effective for processing large volumes of text.2

Second, transformers are built around attention, a game-changing feature in natural language processing. Attention mechanisms allow transformers to focus on the most critical parts of the input text, much like how humans read by paying varying levels of attention to different words. This ability helps transformers understand and process the context and relationships between words more accurately.2
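As a rough illustration of how attention weights are computed, the sketch below implements scaled dot-product self-attention in plain Python. It is a simplification under stated assumptions: the token vectors are made-up 2-dimensional values, and the same vectors serve as queries, keys, and values, whereas a real transformer first applies learned projections and uses many attention heads.

```python
import math

def softmax(xs):
    peak = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # how much attention each token receives
        # Output is the attention-weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy vectors for three tokens (illustrative numbers, not learned embeddings).
tokens = ["cost", "per", "QALY"]
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

outputs, weights = attention(vectors, vectors, vectors)
for tok, row in zip(tokens, weights):
    print(tok, [round(w, 3) for w in row])
```

Each row of weights sums to 1 and shows how strongly one token attends to every token in the sequence, including itself.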

These 2 traits—handling long sequences and developing attention—make LLMs powerful for text handling, enabling them to generate coherent, contextually relevant, and insightful text across a wide range of applications.

Why LLMs Hallucinate

Hallucinations occur because LLMs, despite their advanced capabilities, do not truly understand the content or the semantic meaning of the content they process. Instead, they predict the next token in a sequence based on patterns learned from vast amounts of text data. This prediction process can sometimes lead to the generation of information that appears coherent but lacks factual basis.

The transformer architecture that underpins LLMs contributes to their ability to generate fluent and contextually relevant text. However, it also means that the models can sometimes overfit to patterns in the training data, leading to the creation of spurious correlations and details. This is where the attention mechanism, while powerful, can also inadvertently emphasize irrelevant or incorrect aspects of the input text.

Key Factors Influencing Hallucinations

Several key factors can influence the propensity of LLMs to generate hallucinations.

First, model-specific factors play a crucial role. The specific architecture and training data of an LLM can significantly impact its likelihood of hallucinating. Some models, depending on their design and the quality of data they were trained on, may be more prone to generating incorrect information than others.

Second, task complexity is a major contributor. More complex or nuanced tasks can increase the chances of hallucinations. LLMs often struggle with processing intricate information or understanding subtle nuances, which can lead to errors in their outputs.

Last, the quality of prompts provided to LLMs is critical. The specificity and clarity of the prompts can greatly affect the accuracy of the model’s responses. Poorly constructed prompts can mislead the model, resulting in inaccurate or irrelevant outputs.

The Importance of Testing and Analysis

To effectively assess and mitigate the risk of hallucinations in LLMs, thorough testing and analysis are crucial. This process involves several key steps:

- Sample Testing: Conducting tests on a representative sample of tasks or prompts helps identify patterns of hallucinations. For example, when using an LLM to extract data from research papers in evidence synthesis, sample testing can reveal how accurately the model identifies and summarizes key findings.

- Error and Hallucination Tracking: Recording the types and frequency of errors and hallucinations encountered during testing is essential. This tracking helps in understanding how often the model generates incorrect information. For instance, if an LLM frequently misinterprets statistical data from clinical studies, this pattern needs to be documented.

- Error Analysis: Analyzing the specific types of errors and hallucinations provides insights into their underlying causes. By examining instances where the LLM incorrectly extracts data from a paper, researchers can identify whether the errors stem from complex language, ambiguous phrasing, or other factors.

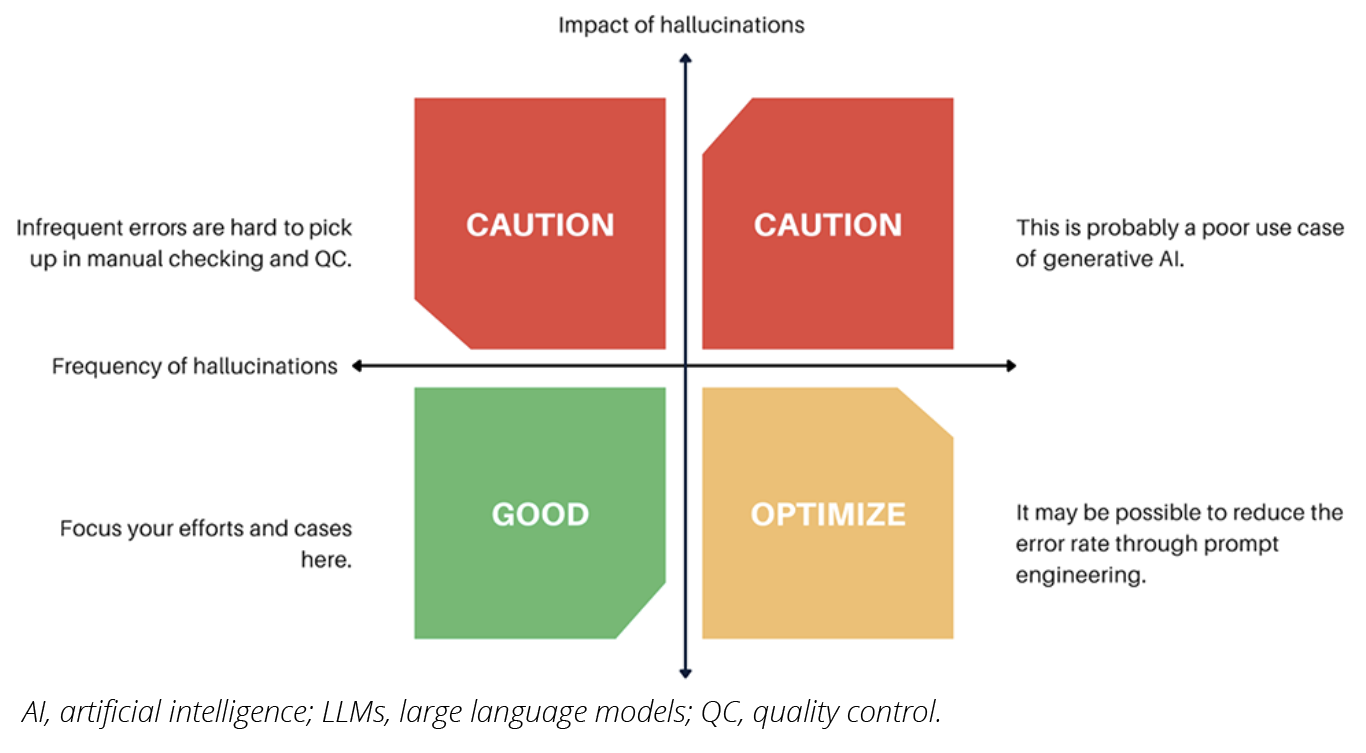

- Impact Assessment: Evaluating the severity and potential consequences of hallucinations is vital to determine their impact on the overall quality of the generated evidence. For example, consider a systematic literature review conducted using an LLM that incorrectly extracts data. If these flawed data are then used to inform health policy decisions, it could lead to ineffective or even harmful policies being implemented (Figure).

Figure. Case Scenarios of Hallucination Impact and Frequency in LLMs.
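The sample testing and error tracking steps described above can be sketched in a few lines of Python. This is a minimal example under stated assumptions: the review log and the error labels are hypothetical, and the Wilson score interval is one common way to express uncertainty around an error rate estimated from a small sample, not a method prescribed here.

```python
import math
from collections import Counter

def wilson_interval(errors, n, z=1.96):
    """Approximate 95% Wilson score interval for an error proportion."""
    if n == 0:
        raise ValueError("n must be positive")
    p = errors / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)

# Hypothetical review log: one label per extracted data point that a human
# checked against the source paper. "ok" means the extraction was correct.
review_log = [
    "ok", "ok", "wrong_value", "ok", "fabricated_value", "ok", "ok",
    "wrong_unit", "ok", "ok", "ok", "wrong_value", "ok", "ok", "ok",
    "ok", "ok", "ok", "ok", "ok",
]

counts = Counter(review_log)
n_checked = len(review_log)
n_errors = n_checked - counts["ok"]
error_rate = n_errors / n_checked
low, high = wilson_interval(n_errors, n_checked)
error_types = {label: k for label, k in counts.items() if label != "ok"}

print(f"Checked {n_checked} items, {n_errors} errors ({error_rate:.0%})")
print(f"Approximate 95% interval: {low:.1%} to {high:.1%}")
print("Errors by type:", error_types)
```

With only 20 checked items the interval is wide, which is a reminder that a small test sample can badly understate or overstate how often a model hallucinates on a given task.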

Strategies for Minimizing Hallucinations

To minimize hallucinations in LLMs, several strategies can be employed. A structured framework for assessing hallucination risk can help to guide decisions about LLM implementation and risk management (Figure). Human oversight is crucial; experts should carefully review and verify the evidence generated by LLMs, especially in high-stakes applications. Ensuring the quality and accuracy of the data used to train LLMs, and of the data supplied to them as input, is also essential for preventing hallucinations. Crafting clear, concise, and informative prompts through prompt engineering can help guide LLMs toward accurate and relevant responses. Retrieval augmented generation (RAG) is a technique that retrieves relevant factual information from external sources and supplies it to the LLM alongside the prompt, beyond what is in its training data, and can increase the accuracy of the LLM outputs.
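The combination of prompt engineering and RAG can be illustrated with a minimal Python sketch. It makes several simplifying assumptions: retrieval is done by simple word overlap rather than the embedding-based search that production systems typically use, the passages are placeholder text rather than real study data, and the function names are hypothetical. No LLM is called; the sketch only shows how a grounded prompt might be assembled.

```python
import re

def tokenize(text):
    """Lowercase a text and return its set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, passages, top_k=2):
    """Rank passages by how many words they share with the question."""
    q_words = tokenize(question)
    ranked = sorted(
        passages,
        key=lambda p: len(q_words & tokenize(p["text"])),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, retrieved):
    """Assemble a prompt that asks the model to answer only from the sources."""
    sources = "\n".join(f"[{p['id']}] {p['text']}" for p in retrieved)
    return (
        "Answer using only the sources below and cite the source id. "
        "If the sources do not contain the answer, reply 'not reported'.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )

# Hypothetical passages (placeholder text, not real study data).
passages = [
    {"id": "S1", "text": "The trial enrolled adult patients and reported the primary endpoint at 12 months."},
    {"id": "S2", "text": "Costs were estimated from the payer perspective over a lifetime horizon."},
    {"id": "S3", "text": "The authors thank the reviewers for their comments."},
]
question = "What perspective and time horizon were used for costs?"

top = retrieve(question, passages)
prompt = build_prompt(question, top)
print(prompt)
```

Asking for a source id and allowing an explicit "not reported" answer gives the human reviewer something concrete to verify and gives the model an alternative to inventing a value.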

Additionally, selecting models that have been shown to have a lower propensity for hallucinations in similar applications can reduce the risk of errors. Continuous monitoring, involving regular testing and evaluation of the LLM’s performance, is necessary to identify and address any emerging issues related to hallucinations. Quality control processes and quality management systems should be adapted to make sure that they provide appropriate checking and assurance of LLM-generated content. By implementing these strategies, the reliability and accuracy of LLM-generated outputs can be significantly improved.
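One way to make risk-proportionate oversight operational is to map the observed frequency of hallucinations and their potential impact to a level of human checking. The Python sketch below is purely illustrative: the 5% threshold, the two impact categories, and the wording of each review level are assumptions chosen for the example, not values taken from this article or from any guideline.

```python
def review_level(error_rate, impact, frequent_threshold=0.05):
    """Suggest a level of human checking from error frequency and impact.

    The threshold and the returned labels are illustrative assumptions.
    """
    if impact not in {"low", "high"}:
        raise ValueError("impact must be 'low' or 'high'")
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be between 0 and 1")

    frequent = error_rate >= frequent_threshold
    if impact == "high" and frequent:
        return "do not automate; keep the task manual or redesign it"
    if impact == "high":
        return "full human verification of every output"
    if frequent:
        return "human review of a large sample plus prompt or model changes"
    return "spot checks on a small sample"

# Hypothetical tasks with error rates measured during sample testing.
for task, rate, impact in [
    ("drafting a report outline", 0.02, "low"),
    ("extracting trial outcomes for a model input", 0.02, "high"),
    ("summarizing abstracts for screening", 0.10, "low"),
    ("extracting hazard ratios for a submission", 0.10, "high"),
]:
    print(f"{task}: {review_level(rate, impact)}")
```

In practice the thresholds would need to be agreed with quality management and revisited as monitoring data accumulate.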

Choosing the Right Model: The Last Strategy

The last strategy to minimize hallucinations is to select models that perform well on relevant benchmarks. These benchmarks, typically reported on the model developers’ websites, evaluate various aspects of a model’s performance. Key benchmarks to consider include massive multitask language understanding (MMLU), which assesses the model’s knowledge and problem solving across a wide range of subjects; discrete reasoning over paragraphs (DROP), which evaluates reading comprehension that requires reasoning over paragraphs; GPQA Diamond (a subset of the Graduate-Level Google-Proof Q&A benchmark), which measures the model’s accuracy on difficult, expert-written science questions; and LongBench, which evaluates the model’s ability to handle tasks requiring long-context understanding. By selecting models that perform well on these benchmarks, researchers can reduce the risk of hallucinations and improve the reliability of the model for tasks such as data extraction in HEOR. Other benchmarks exist as well, and because benchmark scores do not guarantee performance on a specific HEOR task, regular testing and monitoring of the model’s performance remain crucial to maintain its accuracy and reliability over time.

Looking Forward

As the field of HEOR continues to evolve, the integration of LLMs offers unprecedented opportunities for innovation and efficiency. However, the potential for hallucinations underscores the need for careful implementation and oversight. By understanding the mechanisms behind LLMs, recognizing the factors that contribute to hallucinations, and employing robust testing and analysis, researchers can harness the power of these models while minimizing risks. Through strategic prompt engineering, continuous monitoring, and human oversight, the reliability and accuracy of LLM-generated outputs can be significantly enhanced. As we navigate this exciting frontier, a balanced approach that combines technological advancement with rigorous validation will be key to unlocking the full potential of LLMs in HEOR.

References:

1. LeCun Y, Boser B, Denker JS, et al. Handwritten digit recognition with a back-propagation network. In: Touretzky DS, ed. Advances in Neural Information Processing Systems. Vol 2. Morgan Kaufmann; 1989:396-404.

2. Vaswani A, Shazeer N, Parmar N, et al. Attention is all you need. In: Guyon I, Luxburg UV, Bengio S, et al, eds. Advances in Neural Information Processing Systems. Vol 30. Curran Associates Inc; 2017:5998-6008.